Ties, smartphones, bags, etc.

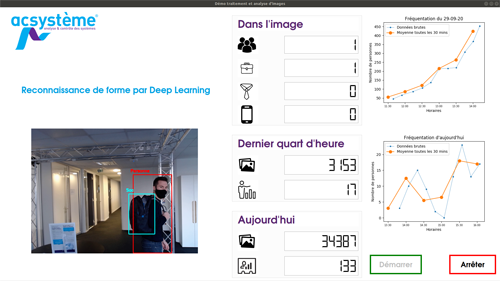

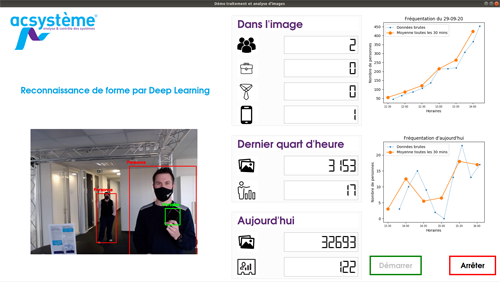

For this demonstrator, the objects we decided to identify are ties, smartphones, bags (handbags, backpacks, shopping bags), and people. To do this, we chose the “Faster R-CNN” algorithm, which detects objects: it locates the regions of the image (bounding boxes) that contain the objects we are looking for and assigns each region a class. To return results within a reasonable time, this algorithm needs high-performance hardware.
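A detector such as Faster R-CNN returns, for each image, a list of boxes with a class label and a confidence score (torchvision's `fasterrcnn_resnet50_fpn`, for instance, returns dictionaries with `boxes`, `labels` and `scores`). The minimal post-processing sketch below keeps only confident detections of the classes of interest and counts them. It assumes the label ids follow the COCO category ids used by torchvision's pretrained models (person = 1, backpack = 27, handbag = 31, tie = 32, cell phone = 77); COCO has no “shopping bag” class. The 0.7 score threshold and the names `Detection`, `filter_detections` and `count_by_class` are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Assumption: label ids follow the COCO category ids used by torchvision's
# pretrained Faster R-CNN. COCO has no "shopping bag" class.
CLASSES_OF_INTEREST: Dict[int, str] = {
    1: "person",
    27: "backpack",
    31: "handbag",
    32: "tie",
    77: "cell phone",
}


@dataclass
class Detection:
    label: int  # class id predicted by the detector
    score: float  # confidence in [0, 1]
    box: Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def filter_detections(detections: List[Detection], min_score: float = 0.7) -> List[Detection]:
    """Keep only confident detections of the classes we care about."""
    return [d for d in detections
            if d.label in CLASSES_OF_INTEREST and d.score >= min_score]


def count_by_class(detections: List[Detection]) -> Dict[str, int]:
    """Count the kept detections per class name."""
    return dict(Counter(CLASSES_OF_INTEREST[d.label] for d in detections))


frame_detections = [
    Detection(1, 0.95, (10, 20, 60, 200)),     # person, kept
    Detection(1, 0.40, (200, 30, 240, 180)),   # person, below the threshold
    Detection(32, 0.81, (30, 60, 40, 90)),     # tie, kept
    Detection(3, 0.99, (300, 100, 400, 160)),  # car, not a class of interest
]
print(count_by_class(filter_detections(frame_detections)))  # {'person': 1, 'tie': 1}
```

The `person` count is the figure that would feed the attendance statistics; the threshold trades missed people against false alarms and would need tuning on real footage.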

Detection every 2 seconds

To measure attendance at a trade show, we found that updating the object detection every 2 s was sufficient, a rate achieved by exploiting the processing power of the graphics card. This reliance on the GPU is typical: most deep learning algorithms owe their accuracy to training on a very large number of examples, which requires significant hardware resources to complete quickly. In addition, refreshing the detections within the video stream often enough to keep the graphical interface smooth also consumes resources.
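Running the detector only every 2 s while the video keeps playing amounts to a simple throttle: every frame is displayed, but the expensive detection is recomputed only once the interval has elapsed, and the last result is reused in between. The sketch below assumes a `detect(frame)` callable wrapping the model; `DetectionScheduler` and `process_stream` are hypothetical names, and the clock is injectable so the logic can be tested without waiting.

```python
import time
from typing import Any, Callable, Iterable, Iterator, Optional, Tuple


class DetectionScheduler:
    """Decides, frame by frame, whether the expensive detector should run again."""

    def __init__(self, interval_s: float = 2.0,
                 clock: Callable[[], float] = time.monotonic):
        self.interval_s = interval_s
        self.clock = clock
        self._last_run: Optional[float] = None

    def should_run(self) -> bool:
        now = self.clock()
        if self._last_run is None or now - self._last_run >= self.interval_s:
            self._last_run = now
            return True
        return False


def process_stream(frames: Iterable[Any], detect: Callable[[Any], Any],
                   scheduler: DetectionScheduler) -> Iterator[Tuple[Any, Any]]:
    """Yield every frame together with the most recent detection result.

    The detector is only called when the scheduler allows it; in between,
    the previous result is reused so the display can refresh at full frame rate.
    """
    latest = None
    for frame in frames:
        if scheduler.should_run():
            latest = detect(frame)
        yield frame, latest
```

`time.monotonic` is used as the default clock because, unlike wall-clock time, it is not affected by system clock adjustments.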

Deep learning and data

In our case, the model learned to recognise the chosen objects from millions of examples, which allows it to take into account:

- the context: for example, a tie is recognised more reliably when it is knotted, and a person can be detected from a hand alone,

- orientation changes,

- lighting changes.
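The robustness listed above comes from the variety of the training data. A common complementary technique, which the demonstrator description does not mention, is data augmentation: during training, images are randomly mirrored or re-lit so the model sees more orientations and lighting conditions. The minimal pure-Python sketch below works on a grayscale image stored as a list of rows; for detection, the bounding boxes must be transformed along with the pixels. The function names, the 50 % flip probability and the 0.6 to 1.4 brightness range are hypothetical choices.

```python
import random
from typing import List, Tuple

Image = List[List[int]]          # grayscale pixels 0..255, one list per row
Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixel-edge coordinates


def hflip(image: Image, boxes: List[Box]) -> Tuple[Image, List[Box]]:
    """Mirror the image left-right and move each box to its mirrored position."""
    width = len(image[0])
    flipped = [list(reversed(row)) for row in image]
    new_boxes = [(width - x2, y1, width - x1, y2) for (x1, y1, x2, y2) in boxes]
    return flipped, new_boxes


def adjust_brightness(image: Image, factor: float) -> Image:
    """Scale every pixel to simulate a lighting change, clipping to 0..255.

    Boxes are unaffected because the geometry does not change.
    """
    return [[min(255, max(0, round(p * factor))) for p in row] for row in image]


def augment(image: Image, boxes: List[Box],
            rng: random.Random) -> Tuple[Image, List[Box]]:
    """Randomly flip (50 % chance) and re-light (factor 0.6..1.4) one training sample."""
    if rng.random() < 0.5:
        image, boxes = hflip(image, boxes)
    return adjust_brightness(image, rng.uniform(0.6, 1.4)), boxes
```

Passing an explicit `random.Random` instance keeps the augmentation reproducible when seeded.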

Statistical analyses

The graphical interface, developed in Python, presents attendance statistics (number of people): the figures for the last 15 minutes, which provide “live” information, and a curve for the whole day, compared with the previous day.
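Both views, a rolling 15-minute window and a day curve compared with the previous day, can be computed from the people counts produced by each detection run. The minimal sketch below assumes one `(timestamp, people_count)` sample per run, arriving in chronological order, and aggregates the day curve by hour using the mean count. The class name, the hourly granularity and the use of the mean are illustrative assumptions, not details given in the text.

```python
from collections import deque
from datetime import date, datetime, timedelta
from typing import Deque, Dict, List, Optional, Tuple


class AttendanceStats:
    """Rolling 15-minute view plus hourly day curves of the detected people count."""

    def __init__(self, window: timedelta = timedelta(minutes=15)):
        self.window = window
        self.recent: Deque[Tuple[datetime, int]] = deque()  # oldest sample first
        self.hourly: Dict[Tuple[date, int], List[int]] = {}  # (day, hour) -> counts

    def add(self, ts: datetime, people: int) -> None:
        """Record one sample; assumes samples arrive in chronological order."""
        self.recent.append((ts, people))
        # Drop samples that fall outside the rolling window.
        while ts - self.recent[0][0] > self.window:
            self.recent.popleft()
        self.hourly.setdefault((ts.date(), ts.hour), []).append(people)

    def live_average(self) -> float:
        """Mean people count over the rolling window (0.0 if no samples)."""
        if not self.recent:
            return 0.0
        return sum(count for _, count in self.recent) / len(self.recent)

    def day_curve(self, day: date) -> Dict[int, float]:
        """Mean people count per hour for one day; hours without samples are omitted."""
        return {hour: sum(counts) / len(counts)
                for (d, hour), counts in self.hourly.items() if d == day}

    def compare_with_previous_day(
            self, day: date) -> Dict[int, Tuple[Optional[float], Optional[float]]]:
        """Map each hour to (mean for `day`, mean for the day before), None if missing."""
        today = self.day_curve(day)
        yesterday = self.day_curve(day - timedelta(days=1))
        return {hour: (today.get(hour), yesterday.get(hour))
                for hour in sorted(set(today) | set(yesterday))}
```

A plotting layer would then draw `day_curve` for today and for the previous day on the same axes.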